Projects

2020-2022

wya

I built wya with a friend so we could better keep track of our friends. How many times have you travelled to a city and not known who was around? How many times have people you know come to town and you’ve missed each other because you had no idea? How many times have you happened to overlap with someone in a city without realizing it?

wya is our attempt to avoid repeating these extremely avoidable scenarios. Think of it as a city-level Zenly. Fun and useful, but without the undercurrent of creepy surveillance. Currently in TestFlight!

Fig Finance

Fig was a side project I started with my best friend to address the growing discrepancy between financial literacy and the accessibility of investing. We wanted to create a “Bloomberg terminal for Robinhood users”. Ironically, while Robinhood has done a lot to democratize investing, they’ve done almost nothing to increase financial literacy, resulting in amateur investors losing a lot of hard-earned money. We built a dashboard that offered investment data in a simple, clean interface.

The other arm of Fig was an automated trading platform, which scraped the positions that Wall St.’s top analysts were taking and made trades based on those positions in real time. We consistently beat the S&P 500 (peak annual returns ~105%).

Speech-to-speech voice conversion

“aud.io” is an automated system for speech-to-speech voice conversion. Want to speak as SpongeBob? Donald Trump? Ellen DeGeneres? Look no further.

You simply speak into the microphone and out comes the same audio, only your voice is now that of your selected speaker. It can learn a new output voice with a day of training and is robust to pretty much any input you throw at it. The technology sits near the frontier of what is out there and is certainly the only readily implementable platform for social purposes. Accepted into the online accelerator Pioneer.

2019

Analyzing rideshare data

I explored the possibility of developing an app that would help rideshare drivers receive bigger payouts for their rides. Since drivers are constantly shifting between rideshare services (always trying to maximize their profits based on surge prices, percent payouts, and general pricing models for each service), I thought to build an app that would put this maximization problem into a quantitative framework. Given the time of day, the driver’s location, and other factors (like the weather), which service is most likely to be the most profitable? While the idea had potential, my analysis of the data ultimately showed too much variance for the service to be reliable.

Modified labeling system for DeepLabCut

The DLC labeling process for whiskers can be laborious and repetitive. This alternative to DeepLabCut’s point-and-click labeling allows for rapid labeling of contiguous structures, like whiskers. In this new system, the user drags the cursor along each structure, after which the spline is discretized into a pre-set number of nodes with equal increments along the path. This modification increased our throughput from labeling a frame every minute (at least) to labeling a frame every few seconds. I was very pleased when the original authors of the software at Harvard asked if they could include my code in their latest, official version.
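
The discretization step described above can be sketched roughly as follows. This is a minimal illustration, not the code that was contributed to DeepLabCut: it assumes the dragged path arrives as a list of `(x, y)` cursor samples, it uses straight-line interpolation between samples rather than a fitted spline, and the name `resample_path` is hypothetical.

```python
import math


def resample_path(points, n_nodes):
    """Return n_nodes points spaced at equal increments along a dragged path.

    points  : list of (x, y) cursor samples (assumed input format)
    n_nodes : pre-set number of labels to place along the structure
    """
    if n_nodes < 2 or len(points) < 2:
        raise ValueError("need at least two samples and two nodes")

    # Cumulative path length at each cursor sample.
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]
    if total == 0:
        raise ValueError("path has zero length")

    nodes = []
    seg = 0  # index of the segment that contains the current target length
    for i in range(n_nodes):
        target = total * i / (n_nodes - 1)
        while seg < len(points) - 2 and cum[seg + 1] < target:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        t = 0.0 if seg_len == 0 else (target - cum[seg]) / seg_len
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        # Linear interpolation inside the segment (a simplification of a spline).
        nodes.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return nodes
```

For example, `resample_path(drag_samples, 8)` would place eight labels along one whisker regardless of how quickly or unevenly the cursor was dragged.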

2016-2018

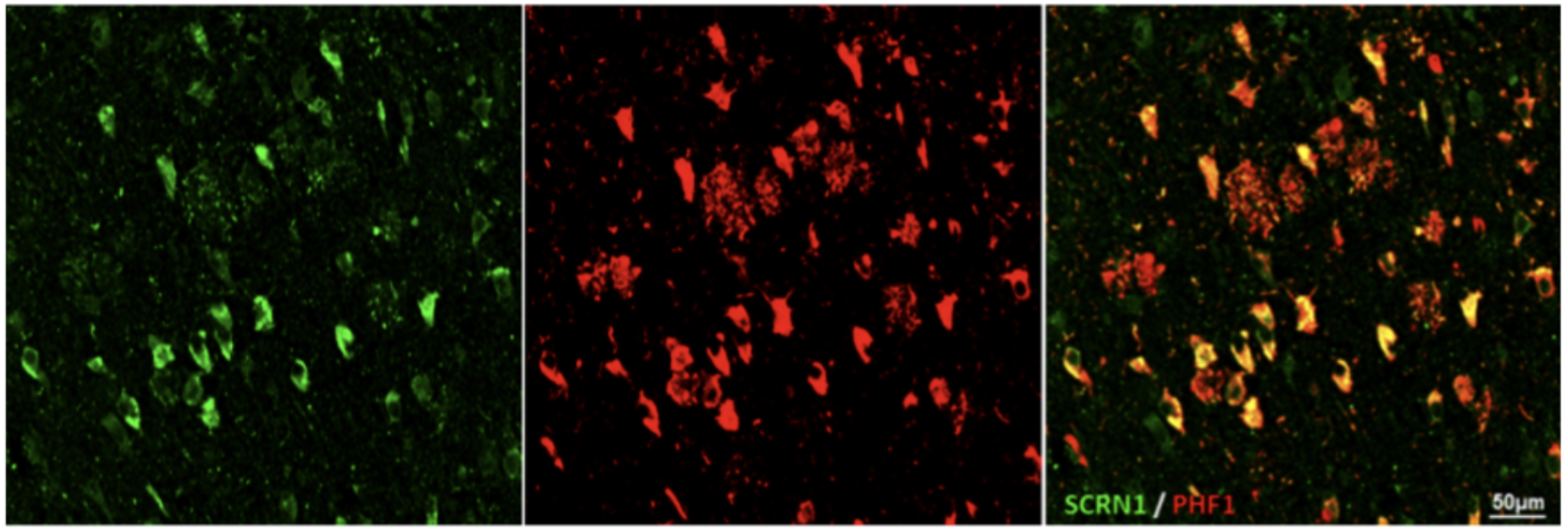

Discovering a new protein in Alzheimer’s disease

I spent 2 years of my undergraduate career working in the lab of Dr. Thomas Wisniewski, who is famous for having co-discovered the role of APOE in Alzheimer’s disease. The goal was ambitious: we set out to discover a protein that had never before been implicated in the disease. Through countless hours of hard work we were ultimately successful, and the outcome of our project has been incredible. Our results were published and have been read more often than 90% of all publications from 2019 (despite appearing in that year’s final month!), the conference presentations we delivered received several major awards, my thesis won a university-wide award, and we were able to blow the doors open to a completely new therapeutic target! At several stages of the project I was able to incorporate my computational interests as well. I was incredibly lucky to work with such a great team (Dr. Eleanor Drummond and Geoffrey Pires) and I couldn’t be more proud of the outcome!

Modeling information transfer in high-noise neural systems

This program was the first part of my final project for Dr. Alex Reyes’ course, Network Models. My goal was to determine how information dissipates throughout a network. To do this, I developed a generative model that creates a population of excitatory neurons that synapse onto each other using a probabilistic model based on the proximity between the two units. The position of each neuron was generated using a Gaussian distribution with parameters in arbitrary units for each of the 3 dimensions. Then, the physiological properties of each unit were generated using the Hodgkin-Huxley equations, and the units fired stochastically for the duration of the “recording” based on a model I built for a previous assignment. One of these HH units was then selected at random and given a stimulation of a set pattern. The goal of this project was to see how many units in the network show significant alignment with this stimulus (i.e. received the information), and how that decays with distance and with number of units.
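
To make the generative step concrete, here is a small sketch of how such a network could be laid out. It covers only the placement and wiring, not the Hodgkin-Huxley dynamics. The exponential fall-off of connection probability with distance, the parameter names (`sigma`, `length_scale`), and the function name `build_network` are all assumptions made for illustration; the write-up above only says that connectivity was probabilistic and based on proximity.

```python
import math
import random


def build_network(n_units, sigma=1.0, length_scale=1.0, seed=0):
    """Place units in 3D and wire them with distance-dependent probability.

    Returns (positions, synapses), where synapses is a list of directed
    (pre, post) index pairs.
    """
    rng = random.Random(seed)

    # Each coordinate is drawn from a Gaussian, in arbitrary units.
    positions = [
        tuple(rng.gauss(0.0, sigma) for _ in range(3)) for _ in range(n_units)
    ]

    synapses = []
    for pre in range(n_units):
        for post in range(n_units):
            if pre == post:
                continue  # no self-connections in this sketch
            distance = math.dist(positions[pre], positions[post])
            # Assumed rule: nearby pairs connect more often than distant ones.
            p_connect = math.exp(-distance / length_scale)
            if rng.random() < p_connect:
                synapses.append((pre, post))
    return positions, synapses
```

With the wiring in hand, one unit can be picked at random as the stimulated source, and the remaining units grouped by distance from it to study how alignment with the stimulus falls off.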

Motion tracker for fruit fly larvae

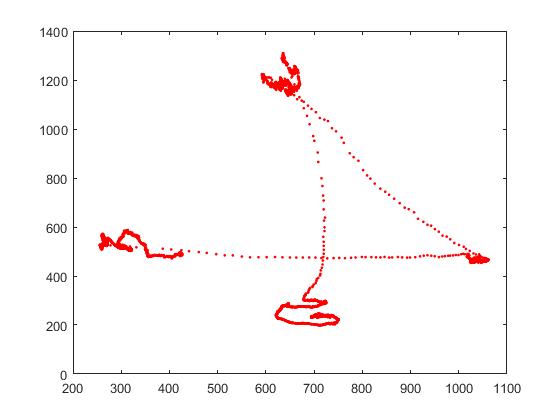

This project was done during my time at the University of Michigan in Summer 2017, in the Collins Lab as a National Institutes of Health BP-ENDURE Scholar. Researchers in the Kuwada Lab were studying the effects of various intervention types on larval locomotion, and had been using ImageJ’s tracking software, which was rife with errors and caused many frustrations. I approached one of the researchers and mentioned my interest in creating tracking software, and she was more than happy to offer their database of videos for me to try.

A brief summary of the algorithm: the program iterates through each frame of the video, and starts by binarizing and processing the given frame to ease the object detection of the larvae. From there it computes the borders of all objects within a given size constraint (tailored specifically to the area of a larva), and calculates the centroid of those objects. It then stores the pixel coordinates of each detected object in a matrix. After having calculated all the coordinates of all the centroids from each object for all frames, it asks for the user to select the paths for each of the larvae, so as to label the coordinates stored in the coordinate matrix (and to avoid Kalman filtering and other slow algorithms for inferring to which larva each coordinate pair belongs). It then iterates through each larval matrix and calculates the Euclidean distance between each object’s centroid in consecutive frames for all frames, and stores those distances in a CSV file. Furthermore, it detects whether a larva stopped (paused in its motion) or hit the wall of the dish, and provides a notice in the CSV for all of those events. This was a super fun project and I feel grateful that I was given an opportunity to pursue it.

Tracking and analyzing eye movements in real-time

Despite our experience of perceptual stability, our eyes are constantly in motion, darting around in subtle movements called saccades about three times a second. Saccades are used to sample important visual information from our environment, by quickly directing the fovea to relevant stimuli. Past studies have characterized perceptual changes that occur during saccade preparation, yet the physiological mechanisms that drive such changes remain elusive. During my time at the Paradiso Lab, we attempted to characterize these mechanisms by delivering transcranial magnetic stimulation (TMS) to human subjects’ primary visual cortex, a region that has been implicated in saccade-based perceptual changes. We delivered rapid, single TMS pulses during a psychophysical discrimination task at various points relative to the saccade. In doing so, we attempted to abolish these perceptual changes leading up to saccades, and thus expose one link in the chain between physiology and perception. This project was incredibly ambitious and exciting, and I ended up developing a full-scale software package, recreating the entire psychophysical task from a past study by Dr. Marisa Carrasco and Dr. Martin Rolfs, interfacing with the eye tracking camera and TMS machinery, and developing algorithms to do online identification of saccades. The lab has plans to continue this project due to our promising, early results.
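
The write-up does not say how saccades were identified online, so the following is only a generic illustration of one common approach, a velocity threshold applied sample by sample. The class name, the 30 deg/s threshold, and the three-sample confirmation window are assumptions, not details of the lab's software.

```python
import math


class OnlineSaccadeDetector:
    """Flag a saccade once gaze speed stays above a threshold for a few samples."""

    def __init__(self, sample_rate_hz, velocity_threshold=30.0, min_samples=3):
        self.dt = 1.0 / sample_rate_hz                 # seconds between samples
        self.velocity_threshold = velocity_threshold   # deg/s (assumed value)
        self.min_samples = min_samples                 # consecutive fast samples
        self.prev = None
        self.fast_run = 0

    def update(self, x_deg, y_deg):
        """Feed one gaze sample (in degrees); return True while a saccade is detected."""
        if self.prev is None:
            self.prev = (x_deg, y_deg)
            return False
        speed = math.dist(self.prev, (x_deg, y_deg)) / self.dt
        self.prev = (x_deg, y_deg)
        # Count consecutive above-threshold samples; reset on any slow sample.
        self.fast_run = self.fast_run + 1 if speed > self.velocity_threshold else 0
        return self.fast_run >= self.min_samples
```

Because `update` keeps only the previous sample and a counter, it can run inside the acquisition loop and trigger a downstream event, such as a stimulation pulse, with a delay of only a few samples.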

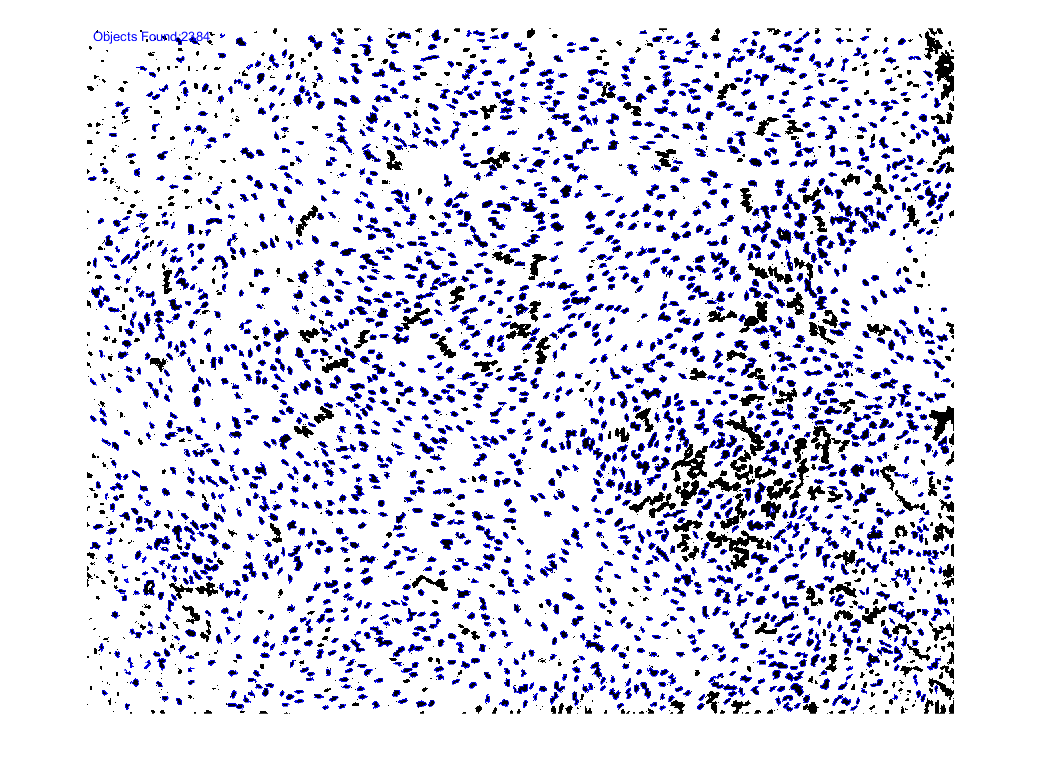

Automated quantification of cellular structures

I undertook this project in hopes of avoiding the incredibly monotonous task of manually quantifying thousands of cells for my research project. The program processes an RGB image (IHC stains are almost always some combination of red, green and blue channels) into a 3D matrix, and allows the user to input a specified channel (e.g. blue for DAPI-stained nuclei) and the size range of the desired object. It then makes minor contrast adjustments to the binary image so as to emphasize the edges of all enclosed objects, and outlines and stores the boundaries of all objects. The cell array containing all data pertaining to the boundaries is further processed to filter out the objects whose areas do not fit within the inputted size constraints. The remaining objects are outlined with the same color as the channel (yes, I had some fun with the aesthetics) and the number of remaining, bounded objects is counted. I also account for occlusions (such as clumped nuclei): when multiple objects are too close together for the program to separate (so that they exceed the size constraints for a single nucleus), they are counted by dividing the area of each clump by the average area of the desired object and adding that to the total object count. I also wrapped the program in a simple function to make it a little easier to use. This was one of my first software packages and I learned a lot from building it. I also want to quickly express my gratitude for the postings of MathWorks user Image Analyst, who helped me with contrast adjustments and thus helped me improve my code.
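
The counting logic, including the clump correction, can be sketched in a few lines. This is a simplified Python illustration rather than the original program: it takes a single channel as a 2D list of intensities, binarizes it with a fixed `threshold`, uses 4-connected flood fill in place of boundary tracing, and rounds each clump's estimated count to the nearest whole object. Those choices and the function names are assumptions.

```python
from collections import deque


def label_areas(mask):
    """Return the pixel area of every 4-connected foreground object in a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood-fill one object and count its pixels.
            seen[r][c] = True
            queue = deque([(r, c)])
            area = 0
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            areas.append(area)
    return areas


def count_objects(channel, threshold, min_area, max_area):
    """Count objects in one image channel, estimating how many sit in each clump."""
    mask = [[value > threshold for value in row] for row in channel]
    areas = label_areas(mask)
    singles = [a for a in areas if min_area <= a <= max_area]
    clumps = [a for a in areas if a > max_area]  # too big to be one object
    count = len(singles)
    if singles and clumps:
        mean_area = sum(singles) / len(singles)
        # Clump area divided by the average single-object area (rounded here).
        count += sum(round(a / mean_area) for a in clumps)
    return count
```

Objects smaller than `min_area` are treated as noise and ignored, which mirrors the size filtering described above.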